#Installing Tensorflow-GPU on virtual environment

Text

Intel Geti Platform: Next-Gen Computer Vision AI Software

What is Intel Geti Software?

Intel’s latest software can create computer vision models with less data and in a quarter of the time. By streamlining the time-consuming data labeling, model training, and optimization steps throughout the AI model creation process, this software lets teams create their own AI models at scale.

Create Robust AI Models for Computer Vision

Small data sets, active learning, an easy-to-use user interface, and integrated collaboration make training AI models straightforward.

Automate and Digitize Projects More Quickly

Teams can rapidly create vision models for a variety of processes, such as identifying faulty parts in a production line, cutting downtime on the factory floor, automating inventory management, or other digitization and automation projects, by streamlining labor-intensive data upload, labeling, training, model optimization, and retraining tasks. The Intel Geti software simplifies the process and significantly reduces the time-to-value of developing AI models.

Core Capabilities Behind the Next-Generation Computer Vision AI Software

Interactive Model Training

Start annotating data with as few as 20–30 images, then use active learning to keep training the model as it learns.

Multiple Computer Vision Tasks

Build models for AI tasks such as anomaly detection, object detection, classification, and semantic segmentation.

Task Chaining

By chaining two or more tasks together, you can turn your model into a multistep, intelligent application without writing extra code.

Smart Annotations

Use professional drawing tools like a pencil, polygon tool, and OpenCV GrabCut to quickly annotate data and segment images.

Production-Ready Models

Export deep learning models either as optimized models for the OpenVINO toolkit, to run on Intel architecture CPUs, GPUs, and VPUs, or, where available, in TensorFlow or PyTorch formats.

Hyperparameter Optimization

A model’s learning process depends on how its hyperparameters are tuned. Intel Geti software’s integrated hyperparameter optimization simplifies this part of a data scientist’s work.
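To make “hyperparameter optimization” concrete, here is a minimal, hypothetical sketch: an exhaustive grid search over two hyperparameters. It is not Intel Geti’s method (the text does not say how Geti optimizes); the toy loss function is an assumed stand-in for “train the model, then measure validation loss”, and the grid values are illustrative.

```python
import itertools
import math


def toy_validation_loss(learning_rate, batch_size):
    # Hypothetical stand-in for training a model and measuring validation loss.
    # By construction it is smallest at learning_rate=1e-3 and batch_size=32.
    return (math.log10(learning_rate) + 3) ** 2 + abs(batch_size - 32) / 64


def grid_search(objective, grid):
    """Evaluate every combination in `grid` and return (best_params, best_loss)."""
    names = sorted(grid)
    best_params, best_loss = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


if __name__ == "__main__":
    search_space = {
        "learning_rate": [1e-1, 1e-2, 1e-3, 1e-4],
        "batch_size": [16, 32, 64],
    }
    params, loss = grid_search(toy_validation_loss, search_space)
    print(params, round(loss, 4))
```

Because every evaluation of a real objective means a full training run, practical tools usually replace this exhaustive loop with cheaper strategies such as random search or Bayesian optimization.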

Rotated Bounding Boxes

Support for rotated bounding boxes extends the same accuracy and ease of training to datasets whose objects are not axis-aligned.
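A rotated bounding box is commonly described by a center, a width and height, and an angle. The sketch below uses that parameterization as an illustrative assumption (not necessarily the one Geti stores) to compute the box’s corners and the smallest axis-aligned box that would have to contain the same object. It assumes y-up coordinates with counter-clockwise angles in degrees; in image coordinates, where y points down, the visual direction of rotation flips.

```python
import math


def rotated_box_corners(cx, cy, width, height, angle_deg):
    """Corners of a box centred at (cx, cy), rotated counter-clockwise by angle_deg."""
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_w, half_h = width / 2, height / 2
    corners = []
    for dx, dy in ((-half_w, -half_h), (half_w, -half_h), (half_w, half_h), (-half_w, half_h)):
        # Rotate the offset from the centre, then translate back.
        corners.append((cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t))
    return corners


def axis_aligned_bounds(corners):
    """Smallest axis-aligned box (x_min, y_min, x_max, y_max) containing the corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return min(xs), min(ys), max(xs), max(ys)


if __name__ == "__main__":
    x0, y0, x1, y1 = axis_aligned_bounds(rotated_box_corners(0, 0, 4, 2, 45))
    print("object area: 8, axis-aligned area:", round((x1 - x0) * (y1 - y0), 6))
```

For a 4 x 2 object tilted 45°, the enclosing axis-aligned box has area 18 against the object’s 8, so more than half of it is background; a rotated box avoids labeling that background as part of the object.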

Model Evaluation

Evaluate the success of your model with thorough statistics.

Flexible Deployment Options to Get You Started

Whether you want to use your own system infrastructure inside your network or a cloud virtual machine without managing infrastructure, simply set up your environment and infrastructure and prepare to install Intel Geti software.

On Premise

Virtual Machine

Enabling Collaboration that Adds Value

In a single instance, cross-functional AI teams can work together and examine outcomes instantly. Thanks to the graphical user interface, team members with little to no AI experience can help train computer vision models. Features such as object identification helpers, drawing tools, and annotation assistants make drag-and-drop model training easy.

Intel Geti Platform Use Cases

Convolutional neural network models are retrained using the Intel Geti platform for important computer vision applications, such as:

Semantic and instance segmentation, including counting

Single-label, multi-label, and hierarchical classification

Anomaly classification, detection, and segmentation

Axis-aligned and rotational object detection

Task chaining is also supported, allowing you to create intelligent, multi-step applications.

Manufacturing: Create AI for industrial controls, worker safety systems, autonomous assembly, and defect detection.

Smart Agriculture: Create models for autonomous devices that can assess crop health, detect weeds and pests, apply spot fertilizer and treatment, and harvest crops.

Smart Cities: Create AI-powered traffic-management systems to automatically route traffic, create emergency-recognition and response systems, and utilize video data to enhance safety in real time.

Retail: Create AI for accurate, touchless checkout, better safety and loss prevention, and autonomous inventory management systems.

Video Safety: Create task-specific models for the identification of safety gear, PPE, social distancing, and video analytics.

Medical Care: Create models to help with diagnosis and procedures, assess lab data and count cultures, identify abnormalities in medical images, and expedite medical research.

REST APIs and a software development kit (SDK) may be used to incorporate all of these models into your pipeline, or you can use the OpenVINO toolkit to distribute them.

FAQs

What types of data can Intel Geti handle?

Text, photos, videos, and structured data are just a few of the types of data that Intel Geti can manage. It excels at processing unstructured data, such as audio and visual inputs for deep learning applications.

Read more on govindhtech.com

#IntelGetiPlatform#NextGenComputer#VisionAISoftware#AImodels#ComputerVision#CreateRobustAIModels#DigitizeProjects#OpenVINO#deeplearning#CoreCapabilitiesBehind#data#IntelGeti#technology#TensorFlow#virtualmachine#Convolutionalneuralnetwork#technews#news#govindhtech

Link

In this tutorial, you will learn how to install CUDA and configure GPU computing for TensorFlow on Ubuntu, following a step-by-step guideline for installing the CUDA 9.0 Toolkit on Ubuntu.
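The tutorial targets the CUDA 9.0 Toolkit. A minimal sketch for confirming which toolkit release is actually installed before configuring TensorFlow-GPU, assuming `nvcc` is on PATH and prints its usual “Cuda compilation tools, release X.Y” line; the function names are hypothetical.

```python
import re
import shutil
import subprocess

# Matches the release line printed by `nvcc --version`, e.g.
# "Cuda compilation tools, release 9.0, V9.0.176"
_RELEASE_RE = re.compile(r"release (\d+)\.(\d+)")


def parse_cuda_release(nvcc_output):
    """Return (major, minor) parsed from `nvcc --version` output, or None."""
    match = _RELEASE_RE.search(nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def cuda_matches(nvcc_output, required=(9, 0)):
    """True if the installed toolkit is exactly the required release."""
    return parse_cuda_release(nvcc_output) == required


if __name__ == "__main__":
    if shutil.which("nvcc") is None:
        print("nvcc not found; is the CUDA toolkit's bin directory on PATH?")
    else:
        out = subprocess.run(["nvcc", "--version"], capture_output=True, text=True).stdout
        print("CUDA release:", parse_cuda_release(out), "| matches 9.0:", cuda_matches(out))
```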

#ai#cuda installation guideline#Disabling nouveau#Installing Tensorflow-GPU on virtual environment#cuda is the programming language

Text

Testing Pre-trained Models Part One: im2txt

Now that I had gained a reasonable amount of knowledge about how machine learning models are developed, through my experimentation with TensorFlow in Jupyter Notebook, I moved on to testing pre-trained image captioning models so I could decide which one I would use for my project. However, this was more difficult than I expected, as there were not many pre-trained open-source image captioning models available. There seems to have been a surge of interest in image captioning among researchers around 2015, due to the release of large-scale image captioning datasets such as MS COCO and Flickr30k; also in 2015, the first image captioning competition, the MS COCO Challenge, was held. Since then, however, interest in captioning seems to have died down. This made it more difficult to find models, but in the end I found two well-known open-source image captioning models. The first is a TensorFlow implementation of Show and Tell, a model created by Google in 2015 that tied for first place in the 2015 MS COCO Challenge. The second is DenseCap, which was created in 2016. DenseCap takes a different approach to image captioning: rather than generating a one-sentence caption for the whole image, it generates captions for the different objects within the image.

The TensorFlow implementation of Show and Tell is called im2txt; it uses an architecture similar to the original Show and Tell model and is trained on the same dataset. To get the model working, the following libraries are required: Bazel, Python, TensorFlow, NumPy and the Natural Language Toolkit (NLTK). Installing Bazel was straightforward, as I used the package manager Homebrew; however, when testing that it had installed correctly I received an error, because I needed to accept the Xcode license (xcodebuild) before I could use Bazel. For Python, the version needed was 2.7, so I created a conda virtual environment for that version of Python.

I already had TensorFlow installed, but because it was installed in another virtual environment, I had to install it again. Furthermore, as this model was released in 2017, it is not compatible with the latest version of TensorFlow (version 2); it only works with TensorFlow version 1. Therefore, inside the conda virtual environment for Python 2.7, I installed TensorFlow version 1.0. I already had NumPy installed, which just left NLTK, whose installation was split into two parts: first installing NLTK itself, then installing its package data, which was a relatively simple process. However, I did have some trouble installing the package data: the NLTK GUI installer caused my computer to crash, so I had to use the command-line installation instead.
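With several environments pinned to different Python and TensorFlow versions, a small check run inside an environment can confirm it matches what a given model needs. A minimal sketch, assuming the pins described above (Python 2.7 and TensorFlow 1.x); the names are hypothetical, and the code avoids f-strings so it also runs under Python 2.7.

```python
import re
import sys

# Pins for the im2txt environment described above; adjust per checkpoint.
REQUIRED = {"python": (2, 7), "tensorflow": (1,)}


def version_tuple(text):
    """'1.0.1' -> (1, 0, 1); stops at suffixes such as '0rc1' or '0-rc1'."""
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
        if match.end() != len(piece):
            break
    return tuple(parts)


def check_environment(installed, required=REQUIRED):
    """Return a list of problems; an empty list means every pin matches."""
    problems = []
    for name, prefix in sorted(required.items()):
        found = installed.get(name)
        wanted = ".".join(str(p) for p in prefix)
        if found is None:
            problems.append("%s is not installed (need %s.x)" % (name, wanted))
        elif version_tuple(found)[:len(prefix)] != tuple(prefix):
            problems.append("%s %s found, need %s.x" % (name, found, wanted))
    return problems


if __name__ == "__main__":
    installed = {"python": "%d.%d.%d" % tuple(sys.version_info[:3])}
    try:
        import tensorflow as tf
        installed["tensorflow"] = tf.__version__
    except ImportError:
        pass  # reported as "not installed" below
    for problem in check_environment(installed):
        print("Problem: " + problem)
```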

I now had all the prerequisites for im2txt installed; however, the problem with this model is that it does not come with an official pre-trained model. Instead, you either have to train the model yourself or use pre-trained weights that someone else has trained. As training would take weeks and require a high-spec GPU, it was not practical to train the model myself, so I decided to use weights that other people had trained. Finding pre-trained weights for this model was quite easy; getting them to work on my machine was not. To run, im2txt needs a checkpoint file containing the weights generated during training, as well as a text file containing the vocabulary generated during training. I downloaded and tested a few different checkpoint and vocabulary files, but none of them worked, and I got a range of different errors.
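The vocabulary file matters as much as the checkpoint: word ids are positions in the file, so pairing a checkpoint with the wrong vocabulary can decode the wrong words or fail outright. A minimal sketch of loading such a file, assuming the common im2txt layout of one “word count” pair per line (usually named word_counts.txt) and the start, end and unknown tokens <S>, </S> and <UNK>; these are assumptions, not a guaranteed format. It also flags entries written as b'...' literals, one possible symptom of a file produced under a different Python version.

```python
START_WORD, END_WORD, UNK_WORD = "<S>", "</S>", "<UNK>"


def load_vocab(lines):
    """Build (word_to_id, id_to_word) from 'word count' lines.

    Ids follow line order, so this must be the same vocabulary the
    checkpoint was trained with.
    """
    id_to_word = [line.split()[0] for line in lines if line.strip()]
    if START_WORD not in id_to_word or END_WORD not in id_to_word:
        hint = ""
        if any(word.startswith("b'") for word in id_to_word):
            hint = (" (entries look like b'...' literals; the file may come"
                    " from a different Python version)")
        raise ValueError("vocabulary is missing <S> or </S>" + hint)
    if UNK_WORD not in id_to_word:
        id_to_word.append(UNK_WORD)  # out-of-vocabulary words map here
    word_to_id = {word: i for i, word in enumerate(id_to_word)}
    return word_to_id, id_to_word


if __name__ == "__main__":
    sample = ["<S> 10\n", "</S> 10\n", "a 5\n", "dog 2\n"]
    word_to_id, id_to_word = load_vocab(sample)
    print(id_to_word)
    # With a real file (path is hypothetical):
    # with open("word_counts.txt") as f:
    #     word_to_id, id_to_word = load_vocab(f)
```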

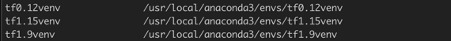

The problem was that the pre-trained weights I found had all been trained with different versions of TensorFlow and Python, so they did not work with my versions. To solve this problem, I created virtual environments matching the Python and TensorFlow versions each checkpoint file had been trained with. In the end I created three different virtual environments, each with a different combination of TensorFlow and Python versions.

For example, the first virtual environment was used to test an im2txt checkpoint that had been trained using TensorFlow 0.12 and Python 3.5. By using a virtual environment with these specific versions of TensorFlow and Python, I was able to get the model to work. However, when I ran the model on one of my images, the results were not what I was expecting: the model produced a single word for the image instead of a caption.

For the image above, for instance, the model generated just a single word rather than a complete caption.

I also tested the model on images from the MS COCO dataset, which is the dataset this model was trained on, and the results were the same. I also tested all of the pre-trained im2txt checkpoint files I had downloaded, with the same results. I am not sure why the model was behaving like this. I thought the model might require some initial training, but that was not the case. Instead, I suspect that one of the prerequisite libraries did not install correctly, or that the version of one of the libraries was causing the model not to work as intended. As the model was essentially performing image recognition rather than generating a caption, I think the Natural Language Toolkit library may have been causing the problem.

Even though this model was not working, I did not want to discard it straight away, so I checked whether a Docker image implementation was available, which thankfully there was. Using Docker meant that I did not have to worry about installing the additional libraries, as Docker takes care of that. I first installed Docker and then downloaded the im2txt Docker image from Docker Hub. Getting the model to work with Docker was fairly straightforward, as all that was required was the pre-trained weights and a vocabulary file, both of which could be downloaded using Docker. I then ran the caption-generation script on the same image I had used previously, and this time the model worked: it generated a complete caption instead of just a single word.

Caption generated using im2txt docker image:

Text

Bringing Artificial Intelligence to the Browser with TensorFlow.js

TensorFlow.js allows web developers to easily build and run browser-based Artificial Intelligence apps using only JavaScript.

Are you a web developer interested in Artificial Intelligence (AI)? Want to easily build some sweet AI apps entirely in JavaScript that run anywhere, without the headache of tedious installs, hosting on cloud services, or working with Python? Then TensorFlow.js is for you!

This paradigm-shifting JavaScript library bridges the gap between frontend web developers and the formerly cumbersome process of training and taming AIs. Developers can now more easily leverage Artificial Intelligence to build uniquely responsive apps that react to user inputs such as voice or facial expression in real time, or to create smarter apps that learn from user behaviour and adapt. AI can create novel, personalized experiences and automate tedious tasks. Examples include content recommendation, interaction through voice commands or gestures, using the cellphone camera to identify products or places, and learning to assist the user with daily tasks. As the ever-accelerating AI revolution works its way into smart frontend applications, it might also be a handy skill to impress your boss or prospective employers with.

In Part I of this series, we will briefly summarize the advantages of TensorFlow.js (TFJS) over the traditional backend-focused, Python-based approaches to show you why it’s such a big deal.

Artificial Intelligence, Liberated

In the past, many of the best Machine Learning (ML) and Deep Learning (DL) frameworks required fluency in Python and its associated library ecosystem. Efficient training of ML models required special-purpose hardware and software, such as NVIDIA GPUs and CUDA. Until now, integrating ML into JavaScript applications has often meant deploying the ML part on remote cloud services, such as AWS SageMaker, and accessing it via API calls. This non-native, backend-focused approach has likely kept many web developers from taking advantage of the rich possibilities that AI offers to frontend development.

Not so with TensorFlow.js! With these obstacles gone, adopting AI solutions is now quick, easy, and fun. In Part 2 of this series, we’ll show you just how easy it is to create, train, and test your own ML models entirely in the browser using only JavaScript!

Besides letting you code in JavaScript, the real game-changer is that TensorFlow.js lets you do everything client-side, which comes with a number of advantages:

Apps are easy to share

Provide the user with a URL, and voilà, they are interacting with your ML model. It’s that simple! Models are run directly in the browser without additional files or installations. You no longer need to link JavaScript to a Python file running on the cloud. And instead of fighting with virtual environments or package managers, all dependencies can be included as HTML script tags. This lets you collaborate efficiently, prototype rapidly, and deploy PoCs painlessly.

The client provides the compute power

Training and predictions are offloaded to the user’s hardware. This eliminates significant cost and effort for the developer. You don’t need to worry about keeping a potentially costly remote machine running, adjusting compute power based on changing usage, or service start-up times. Forget about load balancing, microservices, containerization or provisioning elastic cloud compute capabilities. By removing such backend infrastructure requirements, TensorFlow.js lets you focus on creating amazing user experiences! However, we must still take care that the client’s hardware is powerful enough to provide a satisfying experience given the compute demands of our AI models.

Data never leaves the client’s device!

This is crucially important, as users are increasingly concerned about protecting their sensitive information, especially in the wake of massive data scandals and security breaches such as Cambridge Analytica. With TensorFlow.js, users can take advantage of AI without sending their personal data over a network and sharing it with a third party. This makes it easier to build secure applications that satisfy data security regulations, e.g. healthcare apps that tap into wearable medical sensors. It also lets you build AI browser extensions for enhanced or adaptive user experiences while keeping user behaviour inherently private. Visit this repository for an example of a Google Chrome extension that uses TensorFlow.js to provide in-browser image recognition.

(Top) The conventional ML architecture involves a diverse tech stack and requires coordination and communication between multiple services. (Bottom) TensorFlow.js has the potential to considerably simplify the architecture and tech stack. Everything is run within the client browser using JavaScript.

The TensorFlow.js architecture can be simplified further if a pre-trained model is available. In this case the client downloads the model and skips the training entirely. If further specialization of the model is necessary, considerable effort and training time can be saved by using extended training (transfer learning) on a pre-trained model rather than training from scratch.

Easier access to rich sensor data

Direct JavaScript integration makes it easy to connect your model to device inputs such as microphones or webcams. Since the same browser code runs on mobile devices, you can also make use of accelerometer, GPS, and gyroscope data. Training on mobile remains challenging due to hardware limitations as of early 2019. On-device training will become easier over the next few years as mobile processor manufacturers begin to integrate AI-optimized compute capabilities into their product lines.

Highly interactive and adaptive experiences

Real-time inferencing on the client side lets you make apps that respond immediately to user inputs such as webcam gestures. For example, Google released a webcam game that allows the user to play Pacman by moving their head (try it here). The model is trained to associate the user’s head movements with specific keyboard controls. Apps can also detect and react to human emotions, providing new opportunities to surprise and delight. Pre-trained models can be loaded and then fine-tuned within the browser using transfer learning to tailor them to specific users.

An image from Google’s WebCam Pacman game, where your head becomes the controller!

A demo of the pre-trained PoseNet model in action, which performs real-time estimation of key body point positions.
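Fine-tuning a pre-trained model for one user typically keeps the large network frozen and trains only a small head on that user’s own examples. The sketch below illustrates the idea in Python under loud assumptions: the embeddings are assumed to come from a frozen pre-trained image model, a nearest-centroid classifier stands in for the small trainable network a real TensorFlow.js app would use, and the control labels are hypothetical.

```python
def train_head(examples):
    """Average the embeddings for each label.

    examples: iterable of (embedding, label) pairs, each embedding a list of
    floats assumed to come from a frozen, pre-trained model.
    Returns {label: centroid}.
    """
    sums, counts = {}, {}
    for embedding, label in examples:
        acc = sums.setdefault(label, [0.0] * len(embedding))
        for i, value in enumerate(embedding):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def predict(centroids, embedding):
    """Return the label whose centroid is nearest to the embedding."""
    return min(centroids, key=lambda label: squared_distance(centroids[label], embedding))


if __name__ == "__main__":
    # Toy 2-D "embeddings" for two hypothetical head-movement controls.
    head = train_head([
        ([1.0, 0.0], "up"), ([0.9, 0.1], "up"),
        ([0.0, 1.0], "left"), ([0.1, 0.8], "left"),
    ])
    print(predict(head, [0.8, 0.2]))  # prints: up
```

Only the tiny head is trained; the expensive feature extractor is reused untouched, which is what makes per-user tailoring cheap enough to run client-side.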

TensorFlow.js also has the following helpful perks:

Use of pre-trained models

Tackle sophisticated tasks quickly by loading powerful pre-built models into the browser. No need to reinvent the wheel or start from scratch. Select from a growing number of available models (at this link), or even create JavaScript ports of TensorFlow Python models using this handy TensorFlow converter tool. TensorFlow.js also supports extended learning, letting you retrain these models on sensor data and tailor them to your specific application through transfer learning. There is huge value in building on top of pre-trained models, which have often been trained on enormous datasets using compute resources typically beyond the reach of most individuals, let alone the browser. Let others do the heavy lifting, while you focus on the lighter training task of fine-tuning these models to your user’s needs.

Hardware Acceleration for all GPUs

TensorFlow.js leverages onboard GPU devices to speed up model training and inference, thanks to its utilization of WebGL. You don’t need an NVIDIA GPU with CUDA installed to train a model. That’s right -- you can finally train deep learning models on AMD GPUs too!

Unified backend and frontend codebase

TensorFlow.js also runs on Node.js with CUDA support. In this sense, it extends JavaScript’s traditional benefit of “writing once, running on both client and server” to the realm of artificial intelligence.

Requirements

A couple of notes on what to consider before going full TFJS. TFJS can use significantly more resources than typical JS applications, especially when image or video data are involved. For example, the Webcam Pacman app uses approximately 100 MB of in-browser resources and 200 MB of GPU RAM when running in Chrome v71 on a MacBook Pro with macOS Mojave 10.14.2 (2.2 GHz Intel Core i7, 16 GB 2400 MHz DDR4, Radeon Pro 555X 4096 MB, Intel UHD Graphics 630 1536 MB).

Best performance requires browsers with WebGL support plus GPU support. You can check whether WebGL is supported by visiting this link. Even with WebGL, GPU support may not be available on older devices due to the additional requirement that users have up-to-date video drivers.

The requirements for modern hardware and updated software to achieve optimal performance can cause user experience to vary substantially across devices. Therefore extensive performance testing on a wide variety of devices, especially those on the lower end, should be done before distributing a TFJS app.

There are a couple of strategies for dealing with potential performance limitations. Apps can check the available compute capabilities and choose a model size accordingly, with a corresponding tradeoff in accuracy. A 5-10x reduction in memory requirements can often be achieved by reducing the number of model parameters, without sacrificing more than 20% in accuracy. Reducing the model size also helps save bandwidth in scenarios where a pre-trained model is transferred to the client’s device. Good memory management, such as avoiding memory leaks (e.g. with TFJS’s tf.tidy()), can help keep the app’s footprint low.
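The first strategy, choosing a model size that fits the device, can be sketched in Python as below. Everything here is an assumption: the variant names, parameter counts and relative accuracies are hypothetical placeholders (chosen so the largest-to-smallest shrink is 8x with a 15% accuracy drop, inside the ranges quoted above), weights are assumed to be float32 (4 bytes each), and the memory budget would come from whatever capability check the app performs. Weights are only part of the footprint; intermediate tensors add more, which is what tf.tidy() helps release in TFJS.

```python
# Hypothetical model variants: (name, parameter count, relative accuracy).
# Illustrative placeholders, not measurements.
VARIANTS = [
    ("large", 4_000_000, 1.00),
    ("medium", 1_300_000, 0.93),
    ("small", 500_000, 0.85),
]

BYTES_PER_PARAM = 4  # float32 weights


def weight_megabytes(num_params, bytes_per_param=BYTES_PER_PARAM):
    """Memory taken by the weights alone, in megabytes (10**6 bytes)."""
    return num_params * bytes_per_param / 1_000_000


def pick_variant(memory_budget_mb, variants=VARIANTS):
    """Return the most accurate variant whose weights fit the budget, or None."""
    fitting = [v for v in variants if weight_megabytes(v[1]) <= memory_budget_mb]
    if not fitting:
        return None
    return max(fitting, key=lambda v: v[2])[0]


if __name__ == "__main__":
    for budget in (20, 10, 3, 1):
        print(budget, "MB ->", pick_variant(budget))
```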

Finally, nothing can beat a good understanding of the data and problem domain. Many problems in image recognition, for example, can be solved by using images of surprisingly poor resolution. Some problems can also be simplified by using conventional algorithms with lower resource requirements or by guiding the user to do part of the work, e.g. by centering the object to be detected.

Examples

Sounds great! So how do we get started? Visit Part II of this series to learn how to build your very own TFJS applications!

Here are some more fun examples to get your creative juices flowing!

Let’s Dance! A holiday-themed interactive animation created by the Rangle.io team for December 2018.

Emoji Scavenger Hunt by Google. A fun game where you are challenged to seek out and photograph objects that look like emojis, under a time limit!

Teachable Machine by Google, where you can train a neural network to recognize specific gestures and use these to control an output image or sound.

Rock Paper Scissors by Reiichiro Nakano, a fun twist on the classic schoolyard game.

Emotion Extractor by Brendan Sudol, which recognizes emotion from facial expressions in uploaded images, and tags them with the appropriate emoji.

Brendan Sudol’s Emotion Extractor. Source: https://brendansudol.com/faces/.

In addition to games and apps, TensorFlow.js can be used to build interactive teaching tools for conveying key concepts or novel methods in machine learning and deep learning:

GAN Lab: an interactive playground for Generative Adversarial Networks (GANs) in the browser.

GAN Showcase: a neat demo by Yingtao Tian of a Generative Adversarial Network that ‘dreams’ faces and morphs between them.

tSNE for the Web: an in-browser demo of the t-SNE algorithm for high-dimensional data analysis.

Neural Network Playground: while not technically TFJS, it was built from code that eventually became TFJS, and can be considered the browser app that started it all!

To see even more amazing projects built in TensorFlow.js, check out this showcase!

Click here to learn more about Artificial Intelligence at Rangle.

via Rangle.io | Blog http://bit.ly/2RlnoLX

Photo

"[D] Help with Deep Learning Workstation Software configuration" - Detail:

Hi all,

There are many discussions on choosing the best hardware configuration for a deep learning workstation, but I'm searching for a good (or best, or optimal... depending on how optimistic I am) software configuration for a DL workstation. That is, choosing between:

Operating system (Server or normal?)

Nvidia driver version

CUDA + cuDNN versions

Python version

Docker vs. Python virtual env

Other things that are easier to change later, like installing development platforms (PyTorch, Keras, TF, ... others) and the specific version of each

The HW workstation in mind is one with 4 to 8 Titan Xp or 1080 Ti GPUs, memory and storage as needed, something ordinary.

In the past I had a lot of bugs caused solely by mismatches between CUDA, TF and Keras versions. Also, I have no real experience with Docker, but I think it could improve my workflow.

My thoughts for a configuration are:

Ubuntu Desktop 16.04 LTS. I would like to work with the Server version, so the GPU will not need to drive screen output, but I don't know if it will be easy to manage. Maybe it's possible to work with 18.04 LTS (here: how to install).

Nvidia driver for Titan Xp / 1080 Ti: 410.78

CUDA 9 + cuDNN 7, because TensorFlow supports it out of the box. What if I want compatibility with older versions: can I use multiple CUDA + cuDNN versions? What if in the future I would like to upgrade TF or CUDA + cuDNN: will there be backward compatibility?

Python 3.7, or the newest Python 3 currently available.

Virtual env: I want to go with Docker, but I'm not sure I know exactly how. I can decide later, using a different virtual environment for each.

Thanks!

Caption by jomangy. Posted By: www.eurekaking.com
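The mismatch bugs described above come from each prebuilt TensorFlow GPU release being compiled against one specific CUDA and cuDNN release. A minimal sketch of checking a stack before installing, assuming the two rows below (TF 1.12 with CUDA 9.0 and cuDNN 7, TF 1.13 with CUDA 10.0 and cuDNN 7.4) match TensorFlow's published tested build configurations; verify them against that table and add the rows you need. The function names are hypothetical, and Keras versions would need rows of their own.

```python
# TF (major.minor) -> (CUDA, cuDNN) for prebuilt Linux GPU wheels.
# Verify against TensorFlow's "tested build configurations" table.
TESTED_CONFIGS = {
    "1.12": ("9.0", "7"),
    "1.13": ("10.0", "7.4"),
}


def version_prefix_matches(found, wanted):
    """True if `found` starts with all the dot-separated parts of `wanted`."""
    wanted_parts = wanted.split(".")
    return found.split(".")[:len(wanted_parts)] == wanted_parts


def check_stack(tf_version, cuda_version, cudnn_version):
    """Return None if the stack matches a known row, else a short message."""
    tf_key = ".".join(tf_version.split(".")[:2])
    expected = TESTED_CONFIGS.get(tf_key)
    if expected is None:
        return "no known row for TensorFlow %s" % tf_version
    want_cuda, want_cudnn = expected
    if not version_prefix_matches(cuda_version, want_cuda):
        return "TensorFlow %s expects CUDA %s, found %s" % (tf_version, want_cuda, cuda_version)
    if not version_prefix_matches(cudnn_version, want_cudnn):
        return "TensorFlow %s expects cuDNN %s, found %s" % (tf_version, want_cudnn, cudnn_version)
    return None


if __name__ == "__main__":
    print(check_stack("1.12.0", "10.0.130", "7.4.2"))
```

Containers sidestep much of this bookkeeping: an image can bundle its own matching CUDA, cuDNN and framework, leaving only the NVIDIA driver to come from the host.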

Text

NVIDIA GPU Cloud Adds Support for Microsoft Azure

Thousands more developers, data scientists and researchers can now jumpstart their GPU computing projects, following today’s announcement that Microsoft Azure is a supported platform with NVIDIA GPU Cloud (NGC).

Ready-to-run containers from NGC with Azure give developers access to on-demand GPU computing that scales to their needs and eliminate the complexity of software integration and testing.

Getting AI and HPC Projects Up and Running Faster

Building and testing reliable software stacks to run popular deep learning software — such as TensorFlow, Microsoft Cognitive Toolkit, PyTorch and NVIDIA TensorRT — is challenging and time consuming. There are dependencies at the operating system level and with drivers, libraries and runtimes. And many packages recommend differing versions of the supporting components.

To make matters worse, the frameworks and applications are updated frequently, meaning this work has to be redone every time a new version rolls out. Ideally, you’d test the new version to ensure it provides the same or better performance as before. And all of this is before you can even get started with a project.

For HPC, the difficulty is how to deploy the latest software to clusters of systems. In addition to finding and installing the correct dependencies, testing and so forth, you have to do this in a multi-tenant environment and across many systems.

NGC removes this complexity by providing pre-configured containers with GPU-accelerated software. Its deep learning containers benefit from NVIDIA’s ongoing R&D investment to make sure the containers take advantage of the latest GPU features. And we test, tune and optimize the complete software stack in the deep learning containers with monthly updates to ensure the best possible performance.

NVIDIA also works closely with the community and framework developers, and contributes back to open source projects. We made more than 800 contributions in 2017 alone. And we work with the developers of the other containers available on NGC to optimize their applications, and we test them for performance and compatibility.

NGC with Microsoft Azure

You can access 35 GPU-accelerated containers for deep learning software, HPC applications, HPC visualization tools and a variety of partner applications from the NGC container registry and run them on the following Microsoft Azure instance types with NVIDIA GPUs:

NCv3 (1, 2 or 4 NVIDIA Tesla V100 GPUs)

NCv2 (1, 2 or 4 NVIDIA Tesla P100 GPUs)

ND (1, 2 or 4 NVIDIA Tesla P40 GPUs)

The same NGC containers work across Azure instance types, even with different types or quantities of GPUs.

Using NGC containers with Azure is simple.

Just go to the Microsoft Azure Marketplace and find the NVIDIA GPU Cloud Image for Deep Learning and HPC (this is a pre-configured Azure virtual machine image with everything needed to run NGC containers). Launch a compatible NVIDIA GPU instance on Azure. Then, pull the containers you want from the NGC registry into your running instance. (You’ll need to sign up for a free NGC account first.) Detailed information is in the “Using NGC with Microsoft Azure” documentation.
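Pulling a container from the NGC registry comes down to an ordinary docker pull against nvcr.io. A minimal sketch that only builds the command, assuming the monthly YY.MM-py3 tag scheme NGC used for its framework containers (for example tensorflow:18.09-py3); check the registry for the tags that actually exist. Login is assumed to have been done beforehand with docker login nvcr.io, using the username $oauthtoken and your NGC API key as the password. The function names are hypothetical.

```python
NGC_REGISTRY = "nvcr.io"


def ngc_image(framework, release, python_tag="py3"):
    """Build an image reference such as 'nvcr.io/nvidia/tensorflow:18.09-py3'."""
    return "%s/nvidia/%s:%s-%s" % (NGC_REGISTRY, framework, release, python_tag)


def pull_command(framework, release, python_tag="py3"):
    """Argument list for `docker pull`, usable with subprocess.run(cmd, check=True)."""
    return ["docker", "pull", ngc_image(framework, release, python_tag)]


if __name__ == "__main__":
    # Prints: docker pull nvcr.io/nvidia/tensorflow:18.09-py3
    print(" ".join(pull_command("tensorflow", "18.09")))
```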

In addition to using NVIDIA published images on Azure Marketplace to run these NGC containers, Azure Batch AI can also be used to download and run these containers from NGC on Azure NCv2, NCv3 and ND virtual machines. Follow these simple GitHub instructions to start with Batch AI with NGC containers.

With NGC support for Azure, we are making it even easier for everyone to get started with AI or HPC in the cloud. See how easy it is for yourself.

Sign up now for our upcoming webinar on October 2 at 9am PT to learn more, and get started with NGC today.

The post NVIDIA GPU Cloud Adds Support for Microsoft Azure appeared first on The Official NVIDIA Blog.

NVIDIA GPU Cloud Adds Support for Microsoft Azure published first on https://supergalaxyrom.tumblr.com

Link

Bright Computing, long a prominent provider of cluster management tools for HPC, today released version 8.0 of Bright Cluster Manager and Bright OpenStack. The release includes major and minor new features such as a completely rebuilt and now web-based administration interface, the addition of bursting capability to Azure, expanded container handling, and updated machine learning tools.

Like many in the HPC technology supplier landscape, Bright Computing has been aggressively striving to expand into the enterprise and supporting OpenStack in the process. Today’s 8.0 release coincides with the OpenStack Summit being held this week in Boston.

“In our latest software release, we incorporated many new features that our users have requested,” said Martijn de Vries, Chief Technology Officer of Bright Computing. De Vries continues, “We’ve made significant improvements that provide greater ease-of-use for systems administrators as well as end-users when creating and managing their cluster and cloud environments. Our goal is to increase productivity to decrease the time to results.”

Key updates in the 8.0 release, according to the company, include:

A major update to Bright View – with a new web-based administrator interface and a new workflow that works well on any web-enabled device, including a tablet, Bright View allows users to log in anywhere, anytime to streamline deploying, managing and monitoring a cluster environment.

A new monitoring subsystem – the subsystem was rewritten to a simplified and more sophisticated configuration that provides metric collectors and more flexibility.

Integration with Microsoft Azure – in addition to AWS support, this new integration allows users to create virtual clusters in Azure using the Bright cluster on demand feature, and to extend an on-premises cluster into Azure using the Bright cluster extension capability.

Support for OpenStack Newton – to manage bare metal, virtual machines, and container frameworks, Bright OpenStack 8.0 features improved logging of OpenStack events, performance improvements, and Ironic support for deployment of instances on bare metal.

Integration with Mesos and Marathon – to allow cloud native workloads to be deployed on Bright clusters. Bright provides a streamlined setup process, health checks, service management, and more.

Bright View is the new name for the administrator interface. It replaces CMGUI, the old administrator interface, which was a standalone desktop application. De Vries said it took three programmers roughly a full year to build.

Adding Azure bursting capability gives users a choice besides AWS. Bright supports two types of cloud bursting, de Vries explains in a brief (~40-minute) video covering most of the changes in the new release.

One is “cluster on demand,” in which the user starts a virtualized “Bright Cluster” inside the cloud. The second, called cluster extension, is when you have an on-premises cluster that you extend into the public cloud. “In this case, you could actually extend it into both Azure and AWS, and you could have multiple zones in each of those clouds as well. You could create a very large supercomputer that spans many different regions around the globe, and you can even run an MPI job that spans all of those nodes,” says de Vries.

To the user, all of the nodes (on-premises, in Azure, or in AWS) look the same because Bright uses the same software image on-premises and in the cloud. This simplifies a variety of tasks, including authentication and workload management. “The workload management system might not even be aware the nodes are in the cloud,” says de Vries. He quickly adds that it is probably best to operate a ‘cluster’ in one environment at a time, rather than on-premises and in the cloud simultaneously, to avoid latency-related performance slowdowns.

Like virtually all technology suppliers, Bright has rapidly added support for machine learning, and release 8.0 is no exception.

“We updated all of our existing frameworks (Caffe, Torch, Theano, and TensorFlow) to the latest versions. In case you don’t know what we do for machine learning, we provide ready-to-use packages for all of the Linux distributions we support. So if you are looking to get a machine learning workload up on a Bright cluster, you don’t have to solve all the dependency problems you would face if you installed them manually,” says de Vries. New frameworks added include CNTK, Keras, MXNet, and caffe-mpi.

“Caffe-mpi is pretty exciting because it allows you to distribute your machine learning algorithm over multiple nodes, which I believe is essential in the future as we take machine learning to scale. Right now a lot of people are running machine learning algorithms on just a single box with a whole bunch of GPUs added to it. At some point that is not going to be sufficient anymore, and you will want to distribute this, for example, over a low-latency interconnect such as InfiniBand or Omni-Path. Caffe-mpi allows for that. It’s still a bit experimental, but this is the early stage,” he says.

Likewise, big data, an important component of many workloads and certainly of machine learning, also received attention in 8.0, mainly through upgrades of existing Bright capabilities. Other changes include support for the BeeGFS parallel file system, expanded CephFS support, improved support for GPU-based systems and workloads, and job metrics functionality that is on by default rather than needing to be set up. More details are on Bright’s ‘what’s new in 8.0’ page.

The post Bright Computing 8.0 Adds Azure, Expands Machine Learning Support appeared first on HPCwire.

via Government – HPCwire


In this tutorial, you will learn how to install TensorFlow GPU on Ubuntu. It covers configuring GPU TensorFlow and installing the CUDA 9.0 Toolkit.

Ubuntu ships with nouveau, an open-source driver for NVIDIA GPUs, so the first step is to disable it. This tutorial is divided into the following parts:

Disabling nouveau

Installing the CUDA 9.0 Toolkit for Ubuntu 18.04 LTS

Installing cuDNN 7.0

Installing libcupti

Adding the CUDA toolkit to the path

Installing TensorFlow-GPU in a virtual environment
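The tutorial's exact commands for disabling nouveau are not shown here. A common approach is to put a modprobe blacklist file under `/etc/modprobe.d/`, run `sudo update-initramfs -u`, and reboot. The minimal Python sketch below assumes that approach. It builds the blacklist file content and parses `/proc/modules` to check whether nouveau is still loaded. The file name `blacklist-nouveau.conf` is a widespread convention rather than something taken from the tutorial, and the helper names are hypothetical.

```python
from pathlib import Path
from typing import Set

# Assumed file name; modprobe reads any *.conf file under /etc/modprobe.d.
BLACKLIST_PATH = Path("/etc/modprobe.d/blacklist-nouveau.conf")


def nouveau_blacklist_conf() -> str:
    """Return modprobe config lines that keep the nouveau driver from loading."""
    return "blacklist nouveau\noptions nouveau modeset=0\n"


def loaded_modules(proc_modules: str) -> Set[str]:
    """Parse /proc/modules text; the module name is the first field of each line."""
    return {line.split()[0] for line in proc_modules.splitlines() if line.strip()}


def nouveau_loaded(proc_modules_path: Path = Path("/proc/modules")) -> bool:
    """Return True if the nouveau kernel module is currently loaded (Linux only)."""
    try:
        text = proc_modules_path.read_text()
    except OSError:
        return False  # not Linux, or /proc is unavailable
    return "nouveau" in loaded_modules(text)


if __name__ == "__main__":
    # Writing under /etc needs root, so this only prints the content for review.
    print(f"# contents for {BLACKLIST_PATH}")
    print(nouveau_blacklist_conf(), end="")
    print("nouveau currently loaded:", nouveau_loaded())
```

After saving that content to the file as root, regenerate the initramfs and reboot. Once nouveau is disabled, `lsmod | grep nouveau` should print nothing.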
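TensorFlow-GPU needs the CUDA toolkit added to the path, and it needs libcupti to be findable. The sketch below assumes the CUDA 9.0 default prefix `/usr/local/cuda-9.0`, with the CUPTI libraries under its `extras/CUPTI/lib64` directory. If you installed elsewhere, adjust `CUDA_HOME`. The sketch generates `export` lines suitable for `~/.bashrc` and reports which required directories are missing from the current environment. The function names are hypothetical and not part of any CUDA or TensorFlow tool.

```python
import os
from typing import Dict, List, Mapping

# Assumed default install prefix for CUDA 9.0; change it if yours differs.
CUDA_HOME = "/usr/local/cuda-9.0"


def required_dirs(cuda_home: str = CUDA_HOME) -> Dict[str, List[str]]:
    """Directories each environment variable should contain for TensorFlow-GPU."""
    return {
        "PATH": [f"{cuda_home}/bin"],  # nvcc and other CUDA command-line tools
        "LD_LIBRARY_PATH": [
            f"{cuda_home}/lib64",               # CUDA runtime libs (and cuDNN, if copied here)
            f"{cuda_home}/extras/CUPTI/lib64",  # libcupti
        ],
    }


def bashrc_lines(cuda_home: str = CUDA_HOME) -> List[str]:
    """Build export lines that prepend the CUDA dirs while keeping existing entries."""
    lines = []
    for var, dirs in required_dirs(cuda_home).items():
        joined = ":".join(dirs)
        # ${VAR:+:${VAR}} appends ":<old value>" only when VAR was already non-empty.
        lines.append(f"export {var}={joined}${{{var}:+:${{{var}}}}}")
    return lines


def missing_dirs(
    environ: Mapping[str, str] = os.environ, cuda_home: str = CUDA_HOME
) -> Dict[str, List[str]]:
    """Map each variable to the required directories absent from it in `environ`."""
    missing: Dict[str, List[str]] = {}
    for var, dirs in required_dirs(cuda_home).items():
        present = set(environ.get(var, "").split(os.pathsep))
        gaps = [d for d in dirs if d not in present]
        if gaps:
            missing[var] = gaps
    return missing


if __name__ == "__main__":
    for line in bashrc_lines():
        print(line)
    print("missing:", missing_dirs() or "nothing")
```

Append the printed lines to `~/.bashrc` and open a new shell. Then activate the virtual environment before installing TensorFlow-GPU, so that the environment inherits the updated variables.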

